Quick Start

Prerequisites

- Docker (recommended), or

- Rust toolchain (1.75+) for building from source

Run with Docker

docker run -d --name bloop \

-p 5332:5332 \

-v bloop_data:/data \

-e BLOOP__AUTH__HMAC_SECRET=your-secret-here \

ghcr.io/jaikoo/bloop:latest

Build from Source

git clone https://github.com/jaikoo/bloop.git

cd bloop

cargo build --release

./target/release/bloop --config config.toml

To include the optional analytics engine (DuckDB-powered Insights tab):

cargo build --release --features analytics

Send Your First Error

# Your project API key (from Settings → Projects)

API_KEY="bloop_abc123..."

# Compute HMAC signature using the project key

BODY='{"timestamp":1700000000,"source":"api","environment":"production","release":"1.0.0","error_type":"RuntimeError","message":"Something went wrong"}'

SIG=$(printf '%s' "$BODY" | openssl dgst -sha256 -hmac "$API_KEY" | awk '{print $2}')

# Send to bloop

curl -X POST http://localhost:5332/v1/ingest \

-H "Content-Type: application/json" \

-H "X-Project-Key: $API_KEY" \

-H "X-Signature: $SIG" \

-d "$BODY"

Open http://localhost:5332 in your browser, register a passkey, and view your error on the dashboard.

Configuration

Bloop reads from config.toml in the working directory. Every value can be overridden via environment variables using double-underscore separators: BLOOP__SECTION__KEY.
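To illustrate the naming rule, here is a small Python sketch that maps an override variable to its config section and key. The helper name parse_override is hypothetical and this is not Bloop's actual config loader; it only shows how the double-underscore separators line up with config.toml.

```python
def parse_override(name: str, prefix: str = "BLOOP"):
    """Split BLOOP__SECTION__KEY into ("section", "key"); return None if it does not match."""
    parts = name.split("__")
    if len(parts) != 3 or parts[0] != prefix:
        return None
    section, key = parts[1].lower(), parts[2].lower()
    return section, key


print(parse_override("BLOOP__AUTH__HMAC_SECRET"))  # ('auth', 'hmac_secret')
print(parse_override("BLOOP_SLACK_WEBHOOK_URL"))   # None (single underscores)
```

Single underscores inside a key (HMAC_SECRET) survive the split because only the double underscore acts as a separator.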

Full Reference

# ── Server ──

[server]

host = "0.0.0.0"

port = 5332

# ── Database ──

[database]

path = "bloop.db" # SQLite file path

pool_size = 4 # deadpool-sqlite connections

# ── Ingestion ──

[ingest]

max_payload_bytes = 32768 # Max single request body

max_stack_bytes = 8192 # Max stack trace length

max_metadata_bytes = 4096 # Max metadata JSON size

max_message_bytes = 2048 # Max error message length

max_batch_size = 50 # Max events per batch request

channel_capacity = 8192 # MPSC channel buffer size

# ── Pipeline ──

[pipeline]

flush_interval_secs = 2 # Flush timer

flush_batch_size = 500 # Events per batch write

sample_reservoir_size = 5 # Sample occurrences kept per fingerprint

# ── Retention ──

[retention]

raw_events_days = 7 # Raw event TTL

prune_interval_secs = 3600 # How often to run cleanup

# ── Auth ──

[auth]

hmac_secret = "change-me-in-production"

rp_id = "localhost" # WebAuthn relying party ID

rp_origin = "http://localhost:5332" # WebAuthn origin

session_ttl_secs = 604800 # Session lifetime (7 days)

# ── Rate Limiting ──

[rate_limit]

per_second = 100

burst_size = 200

# ── Alerting ──

[alerting]

cooldown_secs = 900 # Min seconds between re-fires

# ── SMTP (for email alerts) ──

[smtp]

enabled = false

host = "smtp.example.com"

port = 587

username = ""

password = ""

from = "bloop@example.com"

starttls = true

Environment Variables

| Variable | Overrides | Example |
|---|---|---|
| BLOOP__SERVER__PORT | server.port | 8080 |
| BLOOP__DATABASE__PATH | database.path | /data/bloop.db |
| BLOOP__AUTH__HMAC_SECRET | auth.hmac_secret | my-production-secret |
| BLOOP__AUTH__RP_ID | auth.rp_id | errors.myapp.com |
| BLOOP__AUTH__RP_ORIGIN | auth.rp_origin | https://errors.myapp.com |
| BLOOP_SLACK_WEBHOOK_URL | (direct) | Slack incoming webhook URL |
| BLOOP_WEBHOOK_URL | (direct) | Generic webhook URL |

Note: BLOOP_SLACK_WEBHOOK_URL and BLOOP_WEBHOOK_URL are read directly from the environment (not through the config system), so they use single underscores.

Architecture

Bloop is a single async Rust process. All components run as Tokio tasks within one binary.

Storage Layers

| Layer | Retention | Purpose |

|---|---|---|

| Raw events | 7 days (configurable) | Full event payloads for debugging |

| Aggregates | Indefinite | Error counts, first/last seen, status |

| Sample reservoir | Indefinite | 5 sample occurrences per fingerprint |
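How the reservoir decides which occurrences to keep is not specified here. One common way to hold a fixed-size uniform sample of an unbounded stream is reservoir sampling (Algorithm R). The Python sketch below assumes that technique; the Reservoir class is hypothetical, and the capacity of 5 mirrors sample_reservoir_size.

```python
import random


class Reservoir:
    """Fixed-size uniform sample of a stream (Algorithm R). Illustrative only."""

    def __init__(self, capacity: int = 5, rng=None):
        self.capacity = capacity
        self.seen = 0
        self.samples = []
        self.rng = rng or random.Random()

    def offer(self, occurrence) -> None:
        self.seen += 1
        if len(self.samples) < self.capacity:
            self.samples.append(occurrence)    # fill phase: keep everything
            return
        j = self.rng.randrange(self.seen)      # uniform index in [0, seen)
        if j < self.capacity:
            self.samples[j] = occurrence       # replace with probability capacity/seen


r = Reservoir(capacity=5)
for i in range(1000):
    r.offer({"occurrence": i})
print(len(r.samples))  # 5
```

Memory stays constant per fingerprint regardless of how many events arrive, which is consistent with keeping samples indefinitely.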

Fingerprinting

Every ingested error gets a deterministic fingerprint. The algorithm:

- Normalize the message: strip UUIDs → strip IPs → strip all numbers → lowercase

- Extract top stack frame: skip framework frames (UIKitCore, node_modules, etc.), strip line numbers

- Hash:

xxhash3(source + error_type + route + normalized_message + top_frame)

This means "Connection refused at 10.0.0.1:5432" and "Connection refused at 192.168.1.2:3306" produce the same fingerprint. You can also supply your own fingerprint field to override.
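The normalization steps can be sketched in Python as follows. This is a rough illustration under stated assumptions: the regular expressions are guesses at what "strip UUIDs" and "strip IPs" mean, top-frame extraction is left to the caller, and hashlib.blake2b stands in for xxhash3, which is not in the Python standard library.

```python
import hashlib
import re

UUID_RE = re.compile(
    r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}"
)
IPV4_RE = re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b")
NUM_RE = re.compile(r"\d+")


def normalize_message(message: str) -> str:
    """Strip UUIDs, then IPs, then all remaining numbers, then lowercase."""
    message = UUID_RE.sub("", message)
    message = IPV4_RE.sub("", message)
    message = NUM_RE.sub("", message)
    return message.lower()


def fingerprint(source, error_type, route, message, top_frame) -> str:
    parts = [source, error_type, route, normalize_message(message), top_frame]
    # Stand-in hash: the real algorithm is described as xxhash3.
    return hashlib.blake2b("".join(parts).encode(), digest_size=8).hexdigest()


a = fingerprint("api", "RuntimeError", "", "Connection refused at 10.0.0.1:5432", "")
b = fingerprint("api", "RuntimeError", "", "Connection refused at 192.168.1.2:3306", "")
print(a == b)  # True
```

Both messages normalize to the same string, so any deterministic hash over the concatenated fields yields the same fingerprint.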

Backpressure

The ingestion handler pushes events into a bounded MPSC channel (default capacity: 8192). If the channel is full:

- The event is dropped

- The client still receives 200 OK

- A warning is logged server-side

Bloop never returns 429 to your clients. Mobile apps and APIs should not retry errors — if the buffer is full, the event wasn't critical enough to block on.
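The drop-on-full behavior can be sketched with a bounded queue. This is a Python analogy, not Bloop's Rust handler: handle_ingest and the logger name are hypothetical, and queue.Queue stands in for the bounded MPSC channel.

```python
import logging
import queue

log = logging.getLogger("ingest")


def handle_ingest(buffer: queue.Queue, event: dict) -> int:
    """Return the HTTP status for one ingest request."""
    try:
        buffer.put_nowait(event)   # never blocks the request
    except queue.Full:
        log.warning("ingest buffer full, dropping event")
    return 200                     # accepted or dropped, the client sees 200 OK


buffer = queue.Queue(maxsize=2)    # tiny capacity for the demo; the default is 8192
statuses = [handle_ingest(buffer, {"n": i}) for i in range(3)]
print(statuses, buffer.qsize())    # [200, 200, 200] 2
```

The third event is dropped, yet the caller cannot tell the difference, which is why clients have no reason to retry.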

SDK: TypeScript / Node.js

IngestEvent Payload

interface IngestEvent {

timestamp: number; // Unix epoch seconds

source: "ios" | "android" | "api";

environment: string; // "production", "staging", etc.

release: string; // Semver or build ID

error_type: string; // Exception class name

message: string; // Error message

app_version?: string; // Display version

build_number?: string; // Build number

route_or_procedure?: string; // API route or RPC method

screen?: string; // Mobile screen name

stack?: string; // Stack trace

http_status?: number; // HTTP status code

request_id?: string; // Correlation ID

user_id_hash?: string; // Hashed user identifier

device_id_hash?: string; // Hashed device identifier

fingerprint?: string; // Custom fingerprint (overrides auto)

metadata?: Record<string, unknown>; // Arbitrary extra data

}

Option A: Install the SDK

npm install @bloop/sdk

import { BloopClient } from "@bloop/sdk";

const bloop = new BloopClient({

endpoint: "https://errors.myapp.com",

projectKey: "bloop_abc123...", // From project settings

environment: "production",

release: "1.2.0",

});

// Install global handlers to catch uncaught exceptions & unhandled rejections

bloop.installGlobalHandlers();

// Capture an Error object

try {

riskyOperation();

} catch (err) {

bloop.captureError(err, {

route: "POST /api/users",

httpStatus: 500,

});

}

// Capture a structured event

bloop.capture({

errorType: "ValidationError",

message: "Invalid email format",

route: "POST /api/users",

httpStatus: 422,

});

// Express middleware

import express from "express";

const app = express();

app.use(bloop.errorMiddleware());

// Flush on shutdown

await bloop.shutdown();

Option B: Minimal Example (Zero Dependencies)

async function sendToBloop(

endpoint: string,

projectKey: string,

event: Record<string, unknown>,

) {

const body = JSON.stringify(event);

const encoder = new TextEncoder();

const key = await crypto.subtle.importKey(

"raw", encoder.encode(projectKey),

{ name: "HMAC", hash: "SHA-256" }, false, ["sign"],

);

const sig = await crypto.subtle.sign("HMAC", key, encoder.encode(body));

const hex = [...new Uint8Array(sig)]

.map(b => b.toString(16).padStart(2, "0")).join("");

await fetch(`${endpoint}/v1/ingest`, {

method: "POST",

headers: {

"Content-Type": "application/json",

"X-Project-Key": projectKey,

"X-Signature": hex,

},

body,

});

}

// Usage

await sendToBloop("https://errors.myapp.com", "bloop_abc123...", {

timestamp: Math.floor(Date.now() / 1000),

source: "api",

environment: "production",

release: "1.2.0",

error_type: "TypeError",

message: "Cannot read property of undefined",

});

Features (SDK)

- installGlobalHandlers: Captures uncaught exceptions and unhandled promise rejections automatically. Works in both Node.js (process.on("uncaughtException")) and browser (window.addEventListener("error")) environments.

- Express middleware: errorMiddleware() drops into your Express app and captures all unhandled request errors with route and status metadata.

- Batched delivery: Events are buffered and sent in batches automatically.

- Web Crypto HMAC: Works in both Node.js and browser environments.

Events captured by global handlers are tagged with extra metadata so you can distinguish them from manually captured errors:

// Automatically added to metadata:

{

metadata: {

unhandled: true,

mechanism: "uncaughtException" // or "unhandledRejection"

}

}

@bloop/sdk uses the Web Crypto API internally, so it works in both Node.js and browser environments. Use installGlobalHandlers() to catch errors you might otherwise miss.

SDK: Swift (iOS)

Option A: Install the SDK

Add to your Package.swift dependencies or via Xcode → File → Add Package Dependencies:

.package(url: "https://github.com/jaikoo/bloop-swift.git", from: "0.4.0")

import Bloop

// Configure once at app launch (e.g. in AppDelegate or @main App.init)

BloopClient.configure(

endpoint: "https://errors.myapp.com",

secret: "your-hmac-secret",

projectKey: "bloop_abc123...", // From Settings → Projects

environment: "production",

release: "2.1.0"

)

// Install crash handler (captures SIGABRT, SIGSEGV, etc.)

BloopClient.shared?.installCrashHandler()

// Install lifecycle handlers (flush on background/terminate)

BloopClient.shared?.installLifecycleHandlers()

// Capture an error manually

do {

try riskyOperation()

} catch {

BloopClient.shared?.capture(

error: error,

screen: "HomeViewController"

)

}

// Capture a structured event

BloopClient.shared?.capture(

errorType: "NetworkError",

message: "Request timed out",

screen: "HomeViewController"

)

// Synchronous flush (e.g. before a crash report is sent)

BloopClient.shared?.flushSync()

// Close the client (flushes remaining events)

BloopClient.shared?.close()

Option B: Minimal Example (Zero Dependencies)

import Foundation

import CommonCrypto

struct BloopClient {

let url: URL

let projectKey: String // From Settings → Projects

func send(event: [String: Any]) async throws {

let body = try JSONSerialization.data(withJSONObject: event)

let signature = hmacSHA256(data: body, key: projectKey)

var request = URLRequest(url: url.appendingPathComponent("/v1/ingest"))

request.httpMethod = "POST"

request.setValue("application/json", forHTTPHeaderField: "Content-Type")

request.setValue(projectKey, forHTTPHeaderField: "X-Project-Key")

request.setValue(signature, forHTTPHeaderField: "X-Signature")

request.httpBody = body

let (_, response) = try await URLSession.shared.data(for: request)

guard let http = response as? HTTPURLResponse,

http.statusCode == 200 else {

return // Fire and forget — don't crash the app

}

}

private func hmacSHA256(data: Data, key: String) -> String {

let keyData = key.data(using: .utf8)!

var digest = [UInt8](repeating: 0, count: Int(CC_SHA256_DIGEST_LENGTH))

keyData.withUnsafeBytes { keyBytes in

data.withUnsafeBytes { dataBytes in

CCHmac(CCHmacAlgorithm(kCCHmacAlgSHA256),

keyBytes.baseAddress, keyData.count,

dataBytes.baseAddress, data.count,

&digest)

}

}

return digest.map { String(format: "%02x", $0) }.joined()

}

}

// Usage

let client = BloopClient(

url: URL(string: "https://errors.myapp.com")!,

projectKey: "bloop_abc123..."

)

try await client.send(event: [

"timestamp": Int(Date().timeIntervalSince1970),

"source": "ios",

"environment": "production",

"release": "2.1.0",

"error_type": "NetworkError",

"message": "Request timed out",

"screen": "HomeViewController",

])

Features (SDK)

- Crash handler: installCrashHandler() registers signal handlers for SIGABRT, SIGSEGV, SIGBUS, SIGFPE, and SIGTRAP. Captured crashes are persisted to disk and sent on next launch.

- Lifecycle handlers: installLifecycleHandlers() observes UIApplication notifications to automatically flush events when the app enters the background or is about to terminate.

- Synchronous flush: flushSync() blocks the calling thread until all buffered events are sent. Useful in crash handlers or applicationWillTerminate.

- Device info: Automatically enriches events with device model, OS version, and app version/build number from the main bundle.

- HMAC signing: All requests are signed with HMAC-SHA256 using CryptoKit.

- Close: close() flushes any remaining events and releases resources. Safe to call multiple times.

Call installCrashHandler() as early as possible in your app launch sequence — before any other crash reporting SDKs. Only one signal handler can be active per signal.

SDK: Kotlin (Android)

github.com/jaikoo/bloop-kotlin

Option A: Install the SDK

implementation("com.jaikoo.bloop:bloop-client:0.1.0")

import com.jaikoo.bloop.BloopClient

// Configure once in Application.onCreate()

BloopClient.configure(

endpoint = "https://errors.myapp.com",

secret = "your-hmac-secret",

projectKey = "bloop_abc123...", // From Settings → Projects

environment = "production",

release = "3.0.1",

)

// Install uncaught exception handler

BloopClient.shared?.installUncaughtExceptionHandler()

// Capture a throwable

try {

riskyOperation()

} catch (e: Exception) {

BloopClient.shared?.capture(e, screen = "ProfileFragment")

}

// Capture a structured event

BloopClient.shared?.capture(

errorType = "IllegalStateException",

message = "Fragment not attached to activity",

screen = "ProfileFragment",

)

// Synchronous flush (e.g. before process death)

BloopClient.shared?.flushSync()

// Async flush

BloopClient.shared?.flush()

Option B: Minimal Example (Zero Dependencies)

import okhttp3.*

import okhttp3.MediaType.Companion.toMediaType

import okhttp3.RequestBody.Companion.toRequestBody

import org.json.JSONObject

import java.io.IOException

import javax.crypto.Mac

import javax.crypto.spec.SecretKeySpec

class BloopClient(

private val baseUrl: String,

private val projectKey: String, // From Settings → Projects

) {

private val client = OkHttpClient()

private val json = "application/json".toMediaType()

fun send(event: JSONObject) {

val body = event.toString()

val signature = hmacSha256(body, projectKey)

val request = Request.Builder()

.url("$baseUrl/v1/ingest")

.post(body.toRequestBody(json))

.addHeader("X-Project-Key", projectKey)

.addHeader("X-Signature", signature)

.build()

// Fire and forget on background thread

client.newCall(request).enqueue(object : Callback {

override fun onFailure(call: Call, e: IOException) {}

override fun onResponse(call: Call, response: Response) {

response.close()

}

})

}

private fun hmacSha256(data: String, key: String): String {

val mac = Mac.getInstance("HmacSHA256")

mac.init(SecretKeySpec(key.toByteArray(), "HmacSHA256"))

return mac.doFinal(data.toByteArray())

.joinToString("") { "%02x".format(it) }

}

}

// Usage

val bloop = BloopClient("https://errors.myapp.com", "bloop_abc123...")

bloop.send(JSONObject().apply {

put("timestamp", System.currentTimeMillis() / 1000)

put("source", "android")

put("environment", "production")

put("release", "3.0.1")

put("error_type", "IllegalStateException")

put("message", "Fragment not attached to activity")

put("screen", "ProfileFragment")

})

Features (SDK)

- Device enrichment: Automatically adds device model (Build.MODEL), manufacturer, brand, OS version (Build.VERSION.RELEASE), and API level via reflection. Falls back to JVM system properties on non-Android platforms. Opt out with enrichDevice = false.

- Uncaught exception handler: installUncaughtExceptionHandler() wraps Thread.setDefaultUncaughtExceptionHandler to capture crashes. Chains to any previously installed handler so other crash reporters still work.

- flush / flushSync: flush() sends buffered events asynchronously. flushSync() blocks until all events are sent; use it in onTrimMemory or before calling the previous uncaught exception handler.

- HMAC signing: All requests are signed with HMAC-SHA256 via javax.crypto.Mac.

- Thread-safe buffering: Events are buffered in a ConcurrentLinkedQueue and flushed on a scheduled background thread (default: 5 seconds).

Call installUncaughtExceptionHandler() after any other crash SDKs (e.g. Firebase Crashlytics) so bloop captures first and then chains to the previous handler.

SDK: Python

github.com/jaikoo/bloop-python

Option A: Install the SDK

pip install bloop-sdk

from bloop import BloopClient

# Initialize — auto-captures uncaught exceptions via sys.excepthook

client = BloopClient(

endpoint="https://errors.myapp.com",

project_key="bloop_abc123...", # From Settings → Projects

environment="production",

release="1.0.0",

)

# Capture an exception with full traceback

try:

    risky_operation()

except Exception as e:

    client.capture_exception(e,

        route_or_procedure="POST /api/process",

    )

# Extracts: error_type, message, and full stack trace automatically

# Capture a structured event (no exception object needed)

client.capture(

error_type="ValidationError",

message="Invalid email format",

route_or_procedure="POST /api/users",

)

# Context manager for graceful shutdown

with BloopClient(endpoint="...", project_key="...") as bloop:

    bloop.capture(error_type="TestError", message="Hello")

# flush + close happen automatically

Option B: Minimal Example (Zero Dependencies)

import hmac, hashlib, json, time

from urllib.request import Request, urlopen

def send_to_bloop(endpoint, project_key, event):

    body = json.dumps(event).encode()

    sig = hmac.new(

        project_key.encode(), body, hashlib.sha256

    ).hexdigest()

    req = Request(

        f"{endpoint}/v1/ingest",

        data=body,

        headers={

            "Content-Type": "application/json",

            "X-Project-Key": project_key,

            "X-Signature": sig,

        },

    )

    urlopen(req)

# Usage

send_to_bloop("https://errors.myapp.com", "bloop_abc123...", {

"timestamp": int(time.time()),

"source": "api",

"environment": "production",

"release": "1.0.0",

"error_type": "ValueError",

"message": "Invalid input",

})

Features (SDK)

- Zero dependencies: Uses only Python stdlib (hmac, hashlib, json, urllib.request, threading)

- capture_exception: Pass any exception object to capture_exception(e) and it automatically extracts the error type, message, and full stack trace via traceback.format_exception

- Auto-capture (sys.excepthook): Installs a sys.excepthook handler to capture uncaught exceptions in the main thread automatically

- Thread crash capture: Installs a threading.excepthook handler to capture uncaught exceptions in spawned threads (Python 3.8+)

- atexit auto-flush: Registers an atexit handler to flush all buffered events before the process exits, so nothing is lost on clean shutdown

- Thread-safe buffering: Events are buffered and flushed in batches on a background timer (default: 5 seconds)

- Context manager: Use with BloopClient(...) as bloop: for automatic flush and close on exit

- HMAC signing: All requests are signed with HMAC-SHA256 using your project key

SDK: Ruby

Option A: Install the SDK

gem install bloop

require "bloop"

client = Bloop::Client.new(

endpoint: "https://errors.myapp.com",

project_key: "bloop_abc123...", # From Settings → Projects

environment: "production",

release: "1.0.0",

)

# Capture an exception

begin

risky_operation

rescue => e

client.capture_exception(e, route_or_procedure: "POST /api/orders")

end

# Structured event capture

client.capture(

error_type: "ValidationError",

message: "Invalid email format",

route_or_procedure: "POST /api/users",

)

# Block-based error capture

client.with_error_capture(route_or_procedure: "POST /api/checkout") do

process_payment

end

# If process_payment raises, the exception is captured and re-raised

# Graceful shutdown

client.close

Rack Middleware

Automatically capture all unhandled exceptions in your web application:

# Rails — config/application.rb

require "bloop"

BLOOP_CLIENT = Bloop::Client.new(

endpoint: "https://errors.myapp.com",

project_key: "bloop_abc123...",

environment: Rails.env,

release: "1.0.0",

)

config.middleware.use Bloop::RackMiddleware, client: BLOOP_CLIENT

# Sinatra

require "bloop"

client = Bloop::Client.new(

endpoint: "https://errors.myapp.com",

project_key: "bloop_abc123...",

environment: "production",

release: "1.0.0",

)

use Bloop::RackMiddleware, client: client

The middleware captures exceptions, enriches them with the request path as route_or_procedure and the HTTP status as http_status, then re-raises the exception so your normal error handling still works.

Option B: Minimal Example (Zero Dependencies)

require "net/http"

require "json"

require "openssl"

def send_to_bloop(endpoint, project_key, event)

body = event.to_json

sig = OpenSSL::HMAC.hexdigest("SHA256", project_key, body)

uri = URI("#{endpoint}/v1/ingest")

req = Net::HTTP::Post.new(uri)

req["Content-Type"] = "application/json"

req["X-Project-Key"] = project_key

req["X-Signature"] = sig

req.body = body

Net::HTTP.start(uri.host, uri.port, use_ssl: uri.scheme == "https") do |http|

http.request(req)

end

end

# Usage

send_to_bloop("https://errors.myapp.com", "bloop_abc123...", {

timestamp: Time.now.to_i,

source: "api",

environment: "production",

release: "1.0.0",

error_type: "RuntimeError",

message: "Something went wrong",

})

Features (SDK)

- Zero dependencies: Uses only Ruby stdlib (openssl, net/http, json)

- Auto-capture: Registers an at_exit hook to flush events and capture unhandled exceptions

- Thread-safe buffering: Events are buffered with Mutex protection and flushed on a background thread (default: 5 seconds)

- capture_exception: Helper method that extracts error type, message, and backtrace from a Ruby exception

- with_error_capture: Block-based helper that wraps a block in a begin/rescue, captures the exception, and re-raises it. Useful for wrapping individual operations without cluttering your code with rescue blocks.

- Rack middleware: Bloop::RackMiddleware drops into any Rack-compatible app (Rails, Sinatra, Hanami, etc.) and captures all unhandled request exceptions with route and status metadata

- HMAC signing: All requests are signed with HMAC-SHA256 via OpenSSL::HMAC

SDK: React Native

github.com/jaikoo/bloop-react-native

Option A: Install the SDK

npm install @bloop/react-native

Setup with Hook

import { useBloop, BloopErrorBoundary } from "@bloop/react-native";

function App() {

const bloop = useBloop({

endpoint: "https://errors.myapp.com",

projectKey: "bloop_abc123...",

environment: "production",

release: "1.0.0",

appVersion: "1.0.0",

buildNumber: "42",

});

// useBloop automatically installs all three global handlers:

// 1. ErrorUtils global handler (uncaught JS exceptions)

// 2. Promise rejection handler

// 3. AppState handler (flushes on background/inactive)

return (

<BloopErrorBoundary

client={bloop}

fallback={<ErrorScreen />}

>

<Navigation />

</BloopErrorBoundary>

);

}

Production Handlers

The useBloop hook installs three handlers automatically on mount (and cleans them up on unmount). You can also install them manually if you are not using the hook:

import { BloopRNClient } from "@bloop/react-native";

const bloop = new BloopRNClient({ /* ... */ });

// Flushes buffered events when the app goes to background or inactive

bloop.installAppStateHandler();

// Captures unhandled promise rejections as error events

bloop.installPromiseRejectionHandler();

// Clean up all handlers when done

await bloop.shutdown();

- installAppStateHandler: Listens to React Native AppState changes. When the app transitions to "background" or "inactive", all buffered events are flushed immediately so nothing is lost if the OS kills the process.

- installPromiseRejectionHandler: Hooks into React Native's global Promise rejection tracking. Unhandled rejections are captured with metadata.mechanism: "unhandledRejection" and metadata.unhandled: true.

Option B: Inline Alternative

@bloop/react-native wraps @bloop/sdk with React Native-specific features (platform detection, ErrorUtils handler, AppState flush, promise rejection tracking). A zero-dependency inline approach is not practical here — install @bloop/sdk directly if you only need the core client without React Native hooks.

Features (SDK)

- useBloop hook: Creates and manages the client lifecycle. Automatically installs all three global handlers (ErrorUtils, promise rejection, AppState) on mount and cleans up on unmount.

- BloopErrorBoundary: React error boundary that automatically captures render errors. Supports a fallback prop (component or render function) and an onError callback.

- AppState flush: Automatically flushes events when the app backgrounds, so events are not lost to OS process termination.

- Promise rejection capture: Unhandled promise rejections are captured automatically with full error details.

- Platform detection: Automatically sets source to "ios" or "android" based on Platform.OS.

- Manual capture: Use bloop.captureError(error) for caught exceptions.

@bloop/react-native wraps @bloop/sdk with React Native-specific features: automatic platform detection, ErrorUtils global handler, promise rejection tracking, AppState-based flush, and app version/build number metadata.

API Reference

Endpoints

| Method | Path | Auth | Description |
|---|---|---|---|
| GET | /health | None | Health check (DB status, buffer usage) |
| POST | /v1/ingest | HMAC | Ingest a single error event |
| POST | /v1/ingest/batch | HMAC | Ingest up to 50 events |
| GET | /v1/errors | Bearer / Session | List aggregated errors |
| GET | /v1/errors/{fingerprint} | Bearer / Session | Get error detail |
| GET | /v1/errors/{fingerprint}/occurrences | Bearer / Session | List sample occurrences |
| POST | /v1/errors/{fingerprint}/resolve | Bearer / Session | Mark error as resolved |
| POST | /v1/errors/{fingerprint}/ignore | Bearer / Session | Mark error as ignored |
| POST | /v1/errors/{fingerprint}/mute | Bearer / Session | Mute an error (suppress alerts) |
| POST | /v1/errors/{fingerprint}/unresolve | Bearer / Session | Unresolve an error |
| GET | /v1/errors/{fingerprint}/trend | Bearer / Session | Per-error hourly counts |
| GET | /v1/errors/{fingerprint}/history | Bearer / Session | Status change audit trail |
| GET | /v1/releases/{release}/errors | Bearer / Session | Errors for a specific release |
| GET | /v1/trends | Bearer / Session | Global hourly event counts |
| GET | /v1/stats | Bearer / Session | Overview stats (totals, top routes) |
| GET | /v1/alerts | Bearer / Session | List alert rules |
| POST | /v1/alerts | Bearer / Session | Create alert rule |
| PUT | /v1/alerts/{id} | Bearer / Session | Update alert rule |
| DELETE | /v1/alerts/{id} | Bearer / Session | Delete alert rule |
| POST | /v1/alerts/{id}/channels | Bearer / Session | Add notification channel |
| POST | /v1/alerts/{id}/test | Bearer / Session | Send test notification |
| GET | /v1/projects | Session | List projects |
| POST | /v1/projects | Session | Create project |
| GET | /v1/projects/{slug} | Session | Get project details |
| POST | /v1/projects/{slug}/sourcemaps | Bearer / Session | Upload source map (multipart) |
| GET | /v1/projects/{slug}/sourcemaps | Bearer / Session | List source maps |
| POST | /v1/tokens | Session | Create API token |
| GET | /v1/tokens | Session | List tokens for a project |
| DELETE | /v1/tokens/{id} | Session | Revoke a token |
| GET | /v1/analytics/spikes | Bearer / Session | Spike detection (z-score) |
| GET | /v1/analytics/movers | Bearer / Session | Top movers (period-over-period) |
| GET | /v1/analytics/correlations | Bearer / Session | Correlated error pairs |
| GET | /v1/analytics/releases | Bearer / Session | Release impact scoring |
| GET | /v1/analytics/environments | Bearer / Session | Environment breakdown |
| PUT | /v1/admin/users/{user_id}/role | Admin Session | Promote or demote a user |
| GET | /v1/admin/retention | Admin Session | Get retention config + storage stats |
| PUT | /v1/admin/retention | Admin Session | Update retention settings |
| POST | /v1/admin/retention/purge | Admin Session | Trigger immediate data purge |

IngestEvent Schema

| Field | Type | Required | Description |
|---|---|---|---|
| timestamp | integer | Yes | Unix epoch seconds |
| source | string | Yes | "ios", "android", or "api" |
| environment | string | Yes | Deployment environment |
| release | string | Yes | Release version or build ID |
| error_type | string | Yes | Exception class or error category |
| message | string | Yes | Error message (max 2048 bytes) |
| app_version | string | No | Display version string |
| build_number | string | No | Build number |
| route_or_procedure | string | No | API route or RPC method |
| screen | string | No | Mobile screen / view name |
| stack | string | No | Stack trace (max 8192 bytes) |
| http_status | integer | No | HTTP status code |
| request_id | string | No | Correlation/trace ID |
| user_id_hash | string | No | Hashed user identifier |
| device_id_hash | string | No | Hashed device identifier |
| fingerprint | string | No | Custom fingerprint (skips auto-generation) |
| metadata | object | No | Arbitrary JSON (max 4096 bytes) |

Query Parameters for /v1/errors

| Param | Type | Description |
|---|---|---|
| release | string | Filter by release version |
| environment | string | Filter by environment |
| source | string | Filter by source (ios, android, api) |
| route | string | Filter by route/procedure |
| status | string | Filter by status (active, resolved, ignored) |
| since | integer | Unix timestamp lower bound |
| until | integer | Unix timestamp upper bound |
| sort | string | Sort field |
| limit | integer | Results per page (default: 50, max: 200) |
| offset | integer | Pagination offset |
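As a sketch of how these parameters combine into a request, the Python helper below builds a filtered, paginated /v1/errors URL with a Bearer header. The function name build_errors_request is hypothetical, and the request is only constructed, not sent.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_errors_request(base_url: str, token: str, **filters) -> Request:
    """Build a GET /v1/errors request; filters set to None are omitted."""
    params = {k: v for k, v in filters.items() if v is not None}
    url = f"{base_url}/v1/errors"
    if params:
        url += "?" + urlencode(params)
    return Request(url, headers={"Authorization": f"Bearer {token}"})


req = build_errors_request(
    "https://errors.myapp.com", "bloop_tk_...",
    environment="production", status="resolved", limit=50, offset=0,
)
print(req.full_url)
# https://errors.myapp.com/v1/errors?environment=production&status=resolved&limit=50&offset=0
```

To page through results, keep limit fixed and increase offset by limit on each call until a page comes back with fewer than limit entries.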

Response: Error List /v1/errors

[

{

"fingerprint": "a1b2c3d4e5f6",

"project_id": "proj_abc123",

"release": "1.2.0",

"environment": "production",

"error_type": "RuntimeError",

"message": "connection refused at ::",

"source": "api",

"total_count": 42,

"first_seen": 1700000000000,

"last_seen": 1700086400000,

"status": "unresolved"

}

]

Response: Error Detail /v1/errors/{fingerprint}

{

"aggregates": [

{

"fingerprint": "a1b2c3d4",

"release": "1.2.0",

"environment": "production",

"total_count": 42,

"status": "unresolved",

"first_seen": 1700000000000,

"last_seen": 1700086400000

}

],

"samples": [

{

"source": "api",

"release": "1.2.0",

"stack": "Error: connection refused\n at fetch (/app/src/api.js:42:5)",

"metadata": {},

"captured_at": 1700086400000,

"deobfuscated_stack": [

{

"raw_frame": " at fetch (/app/dist/bundle.min.js:1:2345)",

"original_file": "src/api.ts",

"original_line": 42,

"original_column": 5,

"original_name": "fetchData"

}

]

}

]

}

deobfuscated_stack is only present on samples when source maps are uploaded for the matching project and release. Each frame includes the original file, line, column, and function name when available.

Response: Trends /v1/trends

[

{ "hour_bucket": 1700006400000, "count": 12 },

{ "hour_bucket": 1700010000000, "count": 5 },

{ "hour_bucket": 1700013600000, "count": 23 }

]

Each entry is an hourly bucket (Unix ms). Query with ?hours=72 (default) and optional project_id.
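Because the buckets are in milliseconds while ingest timestamps are in seconds, a client needs to divide by 1000 before converting. A minimal Python sketch using the sample response above (the helper name bucket_to_utc is hypothetical):

```python
from datetime import datetime, timezone

trends = [
    {"hour_bucket": 1700006400000, "count": 12},
    {"hour_bucket": 1700010000000, "count": 5},
    {"hour_bucket": 1700013600000, "count": 23},
]


def bucket_to_utc(hour_bucket_ms: int) -> datetime:
    """Convert a Unix-millisecond hour bucket to an aware UTC datetime."""
    return datetime.fromtimestamp(hour_bucket_ms / 1000, tz=timezone.utc)


for entry in trends:
    print(bucket_to_utc(entry["hour_bucket"]).isoformat(), entry["count"])

total = sum(entry["count"] for entry in trends)
print(total)  # 40
```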

API Tokens

API tokens provide scoped, long-lived access for CI pipelines, AI agents, and programmatic integrations. Tokens use the Authorization: Bearer header and are always scoped to a single project.

Token Format

- Prefix: bloop_tk_ followed by 43 base64url characters (256-bit entropy)

- Storage: SHA-256 hashed in the database; the plaintext is shown exactly once on creation

- Caching: Resolved tokens are cached in memory (5-minute TTL) for fast lookups
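A token with this shape can be sketched in Python as follows. This illustrates the format only and is not Bloop's implementation; in particular, hashing the full string including the prefix is an assumption.

```python
import hashlib
import secrets

PREFIX = "bloop_tk_"


def generate_token() -> tuple[str, str]:
    """Return (plaintext, sha256_hex). Only the hash would be persisted."""
    plaintext = PREFIX + secrets.token_urlsafe(32)  # 32 random bytes -> 43 base64url chars
    digest = hashlib.sha256(plaintext.encode()).hexdigest()
    return plaintext, digest


def matches(presented: str, stored_digest: str) -> bool:
    """Hash the presented token and compare against the stored digest."""
    candidate = hashlib.sha256(presented.encode()).hexdigest()
    return secrets.compare_digest(candidate, stored_digest)


token, stored = generate_token()
print(len(token) - len(PREFIX))  # 43
print(matches(token, stored))    # True
```

Storing only the digest means a database leak does not expose usable tokens, which is also why the plaintext cannot be shown again after creation.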

Scopes

| Scope | Grants access to |
|---|---|
| errors:read | List/get errors, occurrences, trends, stats |
| errors:write | Resolve, ignore, mute, unresolve errors |
| sourcemaps:read | List source maps |
| sourcemaps:write | Upload and delete source maps |
| alerts:read | List alert rules and channels |
| alerts:write | Create, update, delete rules and channels |

Creating a Token

Create tokens from the dashboard (Settings → API Tokens) or via the API:

curl -X POST http://localhost:5332/v1/tokens \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{

"name": "CI Pipeline",

"project_id": "PROJECT_UUID",

"scopes": ["errors:read", "sourcemaps:write"],

"expires_in_days": 90

}'

The response includes the plaintext token. Copy it immediately; it will never be shown again:

{

"id": "tok_abc123",

"name": "CI Pipeline",

"token": "bloop_tk_aBcDeFgHiJk...",

"token_prefix": "bloop_tk_aBcDeFg",

"project_id": "PROJECT_UUID",

"scopes": ["errors:read", "sourcemaps:write"],

"created_at": 1700000000,

"expires_at": 1707776000

}

Using a Token

Pass the token in the Authorization header:

# List errors for the token's project

curl -H "Authorization: Bearer bloop_tk_aBcDeFgHiJk..." \

https://errors.myapp.com/v1/errors

# Resolve an error (requires errors:write scope)

curl -X POST -H "Authorization: Bearer bloop_tk_aBcDeFgHiJk..." \

https://errors.myapp.com/v1/errors/FINGERPRINT/resolve

# Upload a source map (requires sourcemaps:write scope)

curl -X POST -H "Authorization: Bearer bloop_tk_aBcDeFgHiJk..." \

-F "release=1.2.0" -F "file=@dist/app.js.map" \

https://errors.myapp.com/v1/projects/my-app/sourcemaps

Bearer tokens are project-scoped, so you don't need to pass a project_id query parameter; the project is determined from the token. If a token lacks the required scope, the request returns 403 Forbidden.

Managing Tokens

# List tokens for a project (never shows the plaintext token)

curl -H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

"https://errors.myapp.com/v1/tokens?project_id=PROJECT_UUID"

# Revoke a token

curl -X DELETE -H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

https://errors.myapp.com/v1/tokens/TOKEN_ID

Auth Modes Summary

| Mode | Header | Use case |

|---|---|---|

| HMAC (ingest) | X-Project-Key + X-Signature | SDKs sending error events |

| Bearer token | Authorization: Bearer bloop_tk_... | CI, agents, scripts reading/managing errors |

| Session cookie | Cookie: bloop_session=... | Dashboard (browser) |

Alerting

Bloop supports alert rules that fire webhooks when conditions are met.

Alert Rule Types

| Type | Fires when | Config fields |

|---|---|---|

| new_issue | A new fingerprint is seen for the first time | environment (optional filter) |

| threshold | Error count exceeds N in a time window | fingerprint, route, threshold, window_secs |

| spike | Error rate spikes vs rolling baseline | multiplier, baseline_window_secs, compare_window_secs |
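The threshold and spike conditions can be read as simple comparisons. The sketch below is one plausible interpretation, not Bloop's implementation: it assumes a threshold rule fires when the count is strictly greater than threshold, and a spike rule fires when the per-second rate in the compare window exceeds multiplier times the rate in the baseline window. Function names and argument order are invented for the example.

```shell
# threshold_fires <count_in_window> <threshold>
threshold_fires() {
  [ "$1" -gt "$2" ]
}

# spike_fires <compare_count> <compare_window_secs> <baseline_count> <baseline_window_secs> <multiplier>
# Both window lengths must be greater than zero.
spike_fires() {
  awk -v cc="$1" -v cw="$2" -v bc="$3" -v bw="$4" -v m="$5" \
    'BEGIN { exit !((cc / cw) > m * (bc / bw)) }'
}

# 60 errors in the last 5 minutes vs 120 in the last hour, multiplier 3
spike_fires 60 300 120 3600 3 && echo "spike rule would fire"
```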

Create a Rule

curl -X POST http://localhost:5332/v1/alerts \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{

"name": "New production issues",

"config": {

"type": "new_issue",

"environment": "production"

}

}'

Webhook Payload

When an alert fires, Bloop sends a POST to the configured webhook URLs with:

{

"alert_rule": "New production issues",

"fingerprint": "a1b2c3d4e5f6",

"error_type": "RuntimeError",

"message": "Something went wrong",

"environment": "production",

"source": "api",

"timestamp": 1700000000

}

Slack Integration

Set BLOOP_SLACK_WEBHOOK_URL to your Slack incoming webhook URL. Bloop formats the payload as a Slack message automatically.

Notification Channels

Alert rules can have multiple notification channels. Configure channels per-rule in the dashboard or via the API:

# Add a Slack channel to a rule

curl -X POST http://localhost:5332/v1/alerts/1/channels \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{"config": {"type": "slack", "webhook_url": "https://hooks.slack.com/services/XXX"}}'

# Add a generic webhook channel

curl -X POST http://localhost:5332/v1/alerts/1/channels \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{"config": {"type": "webhook", "url": "https://api.pagerduty.com/..."}}'

# Add an email channel

curl -X POST http://localhost:5332/v1/alerts/1/channels \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{"config": {"type": "email", "to": "oncall@example.com"}}'

# Test a rule's notifications

curl -X POST http://localhost:5332/v1/alerts/1/test \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN"

Supported channel types:

| Type | Config | Description |

|---|---|---|

| slack | webhook_url | Posts formatted messages to a Slack channel |

| webhook | url | Sends JSON payload to any HTTP endpoint |

| email | to | Sends alert emails via SMTP (requires SMTP config) |

Threshold Rule Example

Fire when a specific error exceeds a count threshold within a time window:

curl -X POST http://localhost:5332/v1/alerts \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{

"name": "High error rate on checkout",

"config": {

"type": "threshold",

"threshold": 100,

"window_secs": 3600

},

"project_id": "proj_abc123"

}'

Setting Up Slack Webhooks

To receive Bloop alerts in Slack:

- Go to api.slack.com/apps and click Create New App → From scratch

- Name it (e.g., "Bloop Alerts") and select your workspace

- Under Features, click Incoming Webhooks and toggle it On

- Click Add New Webhook to Workspace, select the channel, and authorize

- Copy the webhook URL (starts with https://hooks.slack.com/services/...)

Then add it as a channel on your alert rule:

curl -X POST http://localhost:5332/v1/alerts/1/channels \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{"config":{"type":"slack","webhook_url":"https://hooks.slack.com/services/T00/B00/xxx"}}'

Alternatively, set BLOOP_SLACK_WEBHOOK_URL as a global fallback for rules that have no channels.

Alert Management in the Dashboard

The dashboard provides a full alert management UI under Settings → Alerts:

- Create rules with type selection (new_issue, threshold) and optional project scoping

- Add channels per rule — each rule can notify multiple Slack channels and webhooks

- Enable/disable rules without deleting them

- Test notifications — sends a test payload to verify your channels are working

Cooldown Tuning

After an alert fires, it enters a cooldown period. During cooldown, the same rule won't fire again for the same fingerprint. This prevents alert fatigue during error storms.

| Config | Default | Description |

|---|---|---|

| alerting.cooldown_secs | 900 (15 min) | Global cooldown between re-fires |

For high-volume environments, increase to 3600 (1 hour). For critical alerts, decrease to 300 (5 min).
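The cooldown behaviour amounts to a timestamp comparison per rule and fingerprint. The sketch below shows that check in isolation. The function name is hypothetical, and it assumes the alert may fire again once at least cooldown_secs have passed since the last firing.

```shell
# cooldown_elapsed <now_secs> <last_fired_secs> <cooldown_secs>
# Exit status 0 if the rule may fire again for this fingerprint.
cooldown_elapsed() {
  [ $(( $1 - $2 )) -ge "$3" ]
}

# Last fired 10 minutes ago with the default 900-second cooldown: still suppressed.
cooldown_elapsed 1700000600 1700000000 900 || echo "suppressed by cooldown"
```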

Email Alerts (SMTP)

Bloop can send alert notifications via email using SMTP. Configure SMTP credentials in config.toml:

# ── SMTP (for email alerts) ──

[smtp]

enabled = true

host = "smtp.example.com"

port = 587

username = "alerts@example.com"

password = "your-smtp-password"

from = "bloop@example.com"

starttls = true

Or via environment variables:

BLOOP__SMTP__ENABLED=true

BLOOP__SMTP__HOST=smtp.example.com

BLOOP__SMTP__PORT=587

BLOOP__SMTP__USERNAME=alerts@example.com

BLOOP__SMTP__PASSWORD=your-smtp-password

BLOOP__SMTP__FROM=bloop@example.com

BLOOP__SMTP__STARTTLS=true

Once SMTP is configured, add email channels to your alert rules from the dashboard (Settings → Alerts → Add Channel → Email) or via the API:

curl -X POST http://localhost:5332/v1/alerts/1/channels \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{"config": {"type": "email", "to": "oncall@example.com"}}'

Email alerts require SMTP to be enabled (smtp.enabled = true). If SMTP is not configured, creating an email channel will succeed but emails won't be sent. Use the Test button on the alert rule to verify email delivery.

User Management

Bloop supports admin and regular user roles. Admins can manage projects, alert rules, users, invites, API tokens, source maps, and data retention settings.

Admin Role

The first user to register is automatically an admin. Additional users can be promoted or demoted from the dashboard (Settings → Users) or via the API.

Promote / Demote Users

# Promote a user to admin

curl -X PUT http://localhost:5332/v1/admin/users/USER_ID/role \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{"is_admin": true}'

# Demote an admin to regular user

curl -X PUT http://localhost:5332/v1/admin/users/USER_ID/role \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{"is_admin": false}'

Self-demotion is not allowed. You cannot remove your own admin role. This ensures there is always at least one admin who can manage the system.

Dashboard Controls

In Settings → Users, admins see toggle buttons next to each user:

- Make Admin — Promotes a regular user to admin

- Demote — Removes admin privileges from another admin

- No button appears next to your own name (can't change own role)

Data Retention

Bloop automatically prunes old data based on configurable retention settings. By default, raw events are kept for 7 days and hourly aggregation counts for 90 days. Both values are configurable globally and per-project.

Dashboard Controls

Admins can manage retention from Settings → Data Retention:

- Global settings — Set default raw event and hourly count retention days

- Per-project overrides — Override retention days for specific projects (e.g., keep production data longer)

- Storage stats — View database size, raw event count, and hourly count rows

- Purge Now — Trigger an immediate retention run (with confirmation)

API

# Get current retention config + storage stats

curl http://localhost:5332/v1/admin/retention \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN"

# Update retention settings

curl -X PUT http://localhost:5332/v1/admin/retention \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{

"raw_events_days": 14,

"hourly_counts_days": 90,

"per_project": [

{"project_id": "proj_abc123", "raw_events_days": 30}

]

}'

# Trigger immediate purge

curl -X POST http://localhost:5332/v1/admin/retention/purge \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{"confirm": true}'

Response: Retention Config

{

"global": {

"raw_events_days": 7,

"hourly_counts_days": 90

},

"per_project": [

{

"project_id": "proj_abc123",

"project_name": "Mobile App",

"raw_events_days": 30

}

],

"storage": {

"db_size_bytes": 12345678,

"raw_events_count": 50000,

"hourly_counts_count": 8760

}

}

Retention runs automatically every hour (configurable via retention.prune_interval_secs). After large deletions (>10,000 rows), a VACUUM is run automatically to reclaim disk space.
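To make the retention settings concrete, the sketch below works out which retention period applies to a project and the resulting cutoff timestamp. It assumes a per-project override replaces the global value and that event timestamps are Unix seconds, as in the ingest example. Both function names are illustrative only.

```shell
# effective_days <per_project_days_or_empty> <global_days>
# A per-project override wins; otherwise the global default applies.
effective_days() {
  echo "${1:-$2}"
}

# retention_cutoff <now_secs> <days>
# Events older than this Unix timestamp would be pruned on the next run.
retention_cutoff() {
  echo $(( $1 - $2 * 86400 ))
}

retention_cutoff 1700000000 "$(effective_days "" 7)"
```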

Projects

Bloop supports multiple projects, each with its own API key. All errors, alerts, and source maps are scoped to a project.

Creating a Project

Go to Settings → Projects in the dashboard, or use the API:

curl -X POST http://localhost:5332/v1/projects \

-H "Content-Type: application/json" \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-d '{"name": "Mobile App", "slug": "mobile-app"}'

The response includes the project's API key. Use this key in your SDK configuration:

{

"id": "proj_abc123",

"name": "Mobile App",

"slug": "mobile-app",

"api_key": "bloop_7f3a..."

}

SDK Configuration

Pass the project's API key as projectKey in your SDK config. The SDK sends it as the X-Project-Key header for authentication and project routing:

const bloop = new BloopClient({

endpoint: "https://errors.myapp.com",

projectKey: "bloop_7f3a...",

environment: "production",

release: "1.0.0",

});

A default project is created automatically on first startup using the legacy hmac_secret from config. SDKs that don't send X-Project-Key are routed to this default project for backward compatibility.

Source Maps

Upload JavaScript source maps to see deobfuscated stack traces in the dashboard. Bloop automatically maps minified frames back to original source files.

Uploading Source Maps

Upload via the dashboard (Settings → Source Maps) or the API:

curl -X POST http://localhost:5332/v1/projects/my-app/sourcemaps \

-H "Cookie: bloop_session=YOUR_SESSION_TOKEN" \

-F "release=1.2.0" \

-F "file=@dist/app.js.map"

How It Works

- Upload a .map file for a specific project and release version

- When an error with a stack trace arrives for that release, Bloop matches frames against the source map

- The dashboard shows both the original and raw stack traces with a toggle

CI Integration

Add source map upload to your build pipeline:

# After building your app

for map in dist/*.map; do

curl -X POST https://errors.myapp.com/v1/projects/my-app/sourcemaps \

-H "Cookie: bloop_session=$BLOOP_SESSION" \

-F "release=$VERSION" \

-F "file=@$map"

done

Source maps are stored compressed (gzip) and cached in memory for fast lookups. Each map is uniquely identified by the combination of project, release, and filename.

File Naming & Matching

Bloop matches source map files to stack frames by filename. When a stack frame references app.min.js, Bloop looks for a source map uploaded as app.min.js.map for the matching project and release.

| Upload filename | Matches frames from |

|---|---|

| app.js.map | app.js |

| dist/bundle.min.js.map | bundle.min.js |

| main.abc123.js.map | main.abc123.js |
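The mapping in the table can be reproduced with basename, which is handy for checking a build directory before uploading. This is a sketch of the naming rule as the table presents it (drop the directory, drop the .map suffix). The function name is hypothetical and Bloop's own matching may differ in edge cases.

```shell
# frame_file_for_map <upload filename>
# Prints the frame filename the map would be matched against.
frame_file_for_map() {
  basename "$1" .map
}

frame_file_for_map dist/bundle.min.js.map
```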

Multiple Maps per Release

You can upload multiple source maps for the same release — one per bundle file. Each is stored separately and matched independently.

# Upload all maps from a build

for map in dist/*.map; do

curl -X POST https://errors.myapp.com/v1/projects/my-app/sourcemaps \

-H "Cookie: bloop_session=$BLOOP_SESSION" \

-F "release=2.1.0" \

-F "file=@$map"

done

Limits & Storage

| Limit | Value |

|---|---|

| Max file size (uncompressed) | 50 MB |

| Supported formats | Source Map v3 (.map, .js.map) |

| Storage | Gzip-compressed in SQLite BLOB |

| In-memory cache | Parsed maps cached via moka (auto-evicted) |

Troubleshooting Source Maps

| Issue | Cause | Fix |

|---|---|---|

| Stack shows raw frames | No source map for that release | Upload the .map file matching the release version |

| Some frames deobfuscated, some not | Missing maps for some bundles | Upload maps for all bundle files, not just the main one |

| Wrong original locations | Source map doesn't match the deployed code | Ensure the .map file was generated from the same build as the deployed JS |

Analytics

Bloop includes an optional analytics engine powered by DuckDB for advanced error analysis. Enable it in your config to get spike detection, top movers, error correlations, release impact scoring, and environment breakdowns.

Feature-gated: Analytics requires building with cargo build --features analytics. It is not included in the default build. For Docker, use a build arg: docker build --build-arg FEATURES=analytics.

How It Works

DuckDB attaches the existing SQLite database file read-only via its sqlite_scanner extension. There is no data migration, no schema changes, and no impact on the write path. DuckDB runs in-memory and reads committed data from SQLite's WAL without blocking the writer.

Configuration

# ── Analytics (optional) ──

[analytics]

enabled = true

cache_ttl_secs = 60 # In-memory cache TTL for results (seconds)

zscore_threshold = 2.5 # Z-score threshold for spike detection

Common Query Parameters

All analytics endpoints accept these query parameters:

| Param | Type | Default | Description |

|---|---|---|---|

| project_id | string | — | Filter by project (auto-set with bearer tokens) |

| hours | integer | 72 | Time window in hours (1–720) |

| limit | integer | 20 | Max results (1–100) |

| environment | string | — | Filter by environment |

Spike Detection

Detects hourly error spikes using z-score analysis. Returns fingerprints where the hourly count deviates significantly from the rolling mean. Only returns results where the z-score meets or exceeds the configured threshold (default: 2.5).
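The z-score is the number of standard deviations an hourly count sits above the mean: (count - mean) / stddev. The sketch below recomputes it with awk so that values in a response can be sanity-checked. The function names are made up for the example, and how Bloop treats a zero standard deviation is not documented here; this sketch simply reports no spike in that case.

```shell
# zscore <count> <mean> <stddev>  (stddev must be non-zero)
zscore() {
  awk -v c="$1" -v m="$2" -v s="$3" 'BEGIN { printf "%.1f\n", (c - m) / s }'
}

# is_spike <count> <mean> <stddev> <threshold>
# Exit status 0 if the z-score meets or exceeds the threshold.
is_spike() {
  awk -v c="$1" -v m="$2" -v s="$3" -v t="$4" \
    'BEGIN { exit !(s > 0 && (c - m) / s >= t) }'
}

zscore 145 12.3 8.1   # prints 16.4
```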

curl -H "Authorization: Bearer bloop_tk_..." \

"https://errors.myapp.com/v1/analytics/spikes?hours=24&environment=production"

{

"spikes": [

{

"fingerprint": "a1b2c3",

"error_type": "TimeoutError",

"message": "Request timed out",

"hour_bucket": 1700006400000,

"count": 145,

"mean": 12.3,

"stddev": 8.1,

"zscore": 16.4

}

],

"threshold": 2.5,

"window_hours": 24

}

Top Movers

Compares error counts between the current period and the previous period of equal length using a FULL OUTER JOIN. Surfaces errors that are increasing or decreasing the most, ranked by absolute delta.
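For reference, delta and delta_pct are the absolute and relative change between the two periods. The awk sketch below recomputes them. It assumes delta_pct is delta divided by the previous count, times 100. What Bloop returns when the previous count is zero is not stated, so the sketch prints n/a in that case. The function name is hypothetical.

```shell
# mover_delta <count_current> <count_previous>
# Prints "<delta> <delta_pct>".
mover_delta() {
  awk -v cur="$1" -v prev="$2" 'BEGIN {
    d = cur - prev
    if (prev > 0) printf "%d %.1f\n", d, d / prev * 100
    else          printf "%d n/a\n", d
  }'
}

mover_delta 89 3   # prints 86 2866.7
```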

curl -H "Authorization: Bearer bloop_tk_..." \

"https://errors.myapp.com/v1/analytics/movers?hours=24&limit=10"

{

"movers": [

{

"fingerprint": "d4e5f6",

"error_type": "ConnectionError",

"message": "Database connection refused",

"count_current": 89,

"count_previous": 3,

"delta": 86,

"delta_pct": 2866.7

}

],

"window_hours": 24

}

Error Correlations

Uses DuckDB's CORR() aggregate to find pairs of errors that tend to spike together within the same hourly windows. Only considers fingerprints with ≥6 data points and returns pairs with Pearson correlation ≥0.7. Useful for root-cause discovery: a high correlation means two errors tend to rise and fall in the same hours, which often points to a shared cause.
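The Pearson coefficient behind CORR() can be computed by hand for two series of hourly counts. The awk sketch below is an independent illustration of the statistic, not the query Bloop runs. It reads one "count_a count_b" pair per line and applies the same minimum of 6 data points described above. The function name is invented.

```shell
# pearson: reads "x y" pairs on stdin, prints the correlation to two decimals.
pearson() {
  awk '{ n++; sx += $1; sy += $2; sxx += $1 * $1; syy += $2 * $2; sxy += $1 * $2 }
       END {
         if (n < 6) { print "insufficient data"; exit }
         num = n * sxy - sx * sy
         den = sqrt((n * sxx - sx * sx) * (n * syy - sy * sy))
         if (den == 0) { print "undefined"; exit }
         printf "%.2f\n", num / den
       }'
}

# Two series that move in lockstep correlate perfectly.
printf '1 2\n2 4\n3 6\n4 8\n5 10\n6 12\n' | pearson   # prints 1.00
```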

curl -H "Authorization: Bearer bloop_tk_..." \

"https://errors.myapp.com/v1/analytics/correlations?hours=72"

{

"pairs": [

{

"fingerprint_a": "a1b2c3",

"fingerprint_b": "d4e5f6",

"message_a": "Redis connection timeout",

"message_b": "Cache miss on user session",

"correlation": 0.94

}

],

"window_hours": 72

}

Release Impact

Uses LAG() window functions to score releases by error impact: total errors, unique fingerprints, new fingerprints introduced, and a composite impact score calculated as (new_fingerprints * 10) + error_delta_pct.
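The composite score formula can be evaluated directly. The sketch below applies (new_fingerprints * 10) + error_delta_pct to hypothetical inputs; the numbers are invented for illustration and the function name is not part of Bloop.

```shell
# impact_score <new_fingerprints> <error_delta_pct>
impact_score() {
  awk -v nf="$1" -v pct="$2" 'BEGIN { printf "%.1f\n", nf * 10 + pct }'
}

impact_score 5 12.5    # prints 62.5
impact_score 0 -20     # prints -20.0 (fewer errors, no new fingerprints)
```

Under this formula each new fingerprint weighs as much as a 10-point increase in error volume, so a release that introduces several new error types scores high even if total volume is flat.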

curl -H "Authorization: Bearer bloop_tk_..." \

"https://errors.myapp.com/v1/analytics/releases?limit=5"

{

"releases": [

{

"release": "2.3.0",

"first_seen": 1700000000000,

"error_count": 342,

"unique_fingerprints": 12,

"new_fingerprints": 5,

"error_delta": 180,

"impact_score": 8.7

}

]

}

Environment Breakdown

Shows error distribution across environments with PERCENTILE_CONT(0.50/0.90/0.99) statistics for hourly error rates. Includes percentage of total errors and unique error counts per environment.
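PERCENTILE_CONT is a continuous percentile: it sorts the values and linearly interpolates between the two nearest ranks. The sketch below reproduces that definition with sort and awk for a list of hourly counts, one per line. It illustrates the statistic only; the function name is hypothetical and the input here is invented.

```shell
# percentile_cont <fraction between 0 and 1>; reads one number per line on stdin.
percentile_cont() {
  sort -n | awk -v p="$1" '
    { v[NR] = $1 }
    END {
      if (NR == 0) exit 1
      pos = 1 + p * (NR - 1)                 # 1-based fractional rank
      lo = int(pos)
      hi = (lo < NR) ? lo + 1 : lo
      printf "%.1f\n", v[lo] + (pos - lo) * (v[hi] - v[lo])
    }'
}

printf '%s\n' 5 1 4 2 3 | percentile_cont 0.90   # prints 4.6
```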

curl -H "Authorization: Bearer bloop_tk_..." \

"https://errors.myapp.com/v1/analytics/environments?hours=168"

{

"environments": [

{

"environment": "production",

"total_count": 1250,

"unique_errors": 34,

"pct_of_total": 78.1,

"p50_hourly": 7.0,

"p90_hourly": 23.0,

"p99_hourly": 89.0

}

],

"total_count": 1600

}

Upgrade Notes

v0.3 — Security Hardening

This release contains breaking changes that require attention before upgrading.

Breaking Changes

| Change | Impact | Action Required |

|---|---|---|

| HMAC secret minimum length | Server refuses to start if auth.hmac_secret is shorter than 32 characters. | Before deploying, ensure your secret is ≥ 32 chars. Generate one with openssl rand -hex 32. |

| Session tokens hashed at rest | All existing sessions are invalidated on upgrade. Users must re-login via WebAuthn. | Schedule the upgrade during a low-traffic window. No data migration needed; old sessions are cleaned up automatically (migration 010). |

| api_key removed from project list/get | GET /v1/projects and GET /v1/projects/:slug no longer return the api_key field. API keys are only returned on POST /v1/projects (create) and POST /v1/projects/:slug/rotate-key. | Update any scripts or dashboards that read api_key from GET responses. Capture the key on project creation or use rotate-key to obtain it. |

| Auth endpoint rate limiting | Login and registration endpoints are rate-limited to 5 req/sec per IP (configurable via rate_limit.auth_per_second and rate_limit.auth_burst_size). | No action needed for normal usage. Adjust config values if your deployment has unusual auth traffic patterns. |

| Token route authorization | Token CRUD routes now require the user to be an admin, the project creator, or (for revocation) the token creator. | No action needed unless non-admin users were managing tokens for projects they didn't create. |
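Since the server now refuses to start with a short secret, a deploy script could check the length first and fail early. The sketch below is a hypothetical pre-deploy guard; it counts characters in the shell and does not replicate how Bloop itself measures the secret.

```shell
# secret_long_enough <secret>
# Exit status 0 if the secret has at least 32 characters.
secret_long_enough() {
  [ "${#1}" -ge 32 ]
}

if ! secret_long_enough "${BLOOP__AUTH__HMAC_SECRET:-}"; then
  echo "BLOOP__AUTH__HMAC_SECRET is shorter than 32 characters" >&2
fi
```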

Security Fixes

- C-1: Session tokens are now SHA-256 hashed before database storage (prevents token theft on DB breach).

- H-1: Token CRUD routes enforce project membership (admin or project creator).

- H-2: API keys are no longer exposed in project list/get API responses.

- H-3: Bearer token cache re-validates expires_at on every hit (prevents expired token reuse).

- H-4: /v1/releases/:release/errors now filters by project_id (prevents cross-project data leakage).

- H-7: Auth endpoints rate-limited to prevent brute-force attacks.

- H-8: HMAC secrets shorter than 32 characters are rejected at startup.

Production Checklist

Before going live, work through this checklist:

Security

| Item | Action | Why |

|---|---|---|

| HMAC secret | Set BLOOP__AUTH__HMAC_SECRET to a random 64+ character string | This is the signing key for all SDK authentication. Use openssl rand -hex 32 to generate one. |

| WebAuthn origin | Set BLOOP__AUTH__RP_ID and BLOOP__AUTH__RP_ORIGIN to your domain | Passkey registration fails silently if these don't match the browser's actual origin. |

| HTTPS | Always use TLS in production | WebAuthn requires a secure context. API keys and session cookies are transmitted in headers. |

| Project API keys | Create separate projects per app/service | Isolates errors and allows key rotation without affecting other services. |

Storage

| Item | Action | Why |

|---|---|---|

| Persistent volume | Mount a volume at /data | SQLite stores everything in a single file. Without a volume, data is lost on container restart. |

| Retention policy | Set retention.raw_events_days (default: 7) | Raw events are pruned after this period. Aggregates and samples are kept indefinitely. |

| Backups | Schedule regular SQLite backups | See Backups section below for a cron-based approach. |

Performance

| Item | Default | Guidance |

|---|---|---|

| ingest.channel_capacity | 8192 | Buffer size for incoming events. Increase if you see buffer_usage > 0.5 on /health. |

| pipeline.flush_batch_size | 500 | Events written per batch. Higher = better throughput, more write latency. |

| database.pool_size | 4 | Read connections. Increase for high query concurrency (dashboard + API). |

| rate_limit.per_second | 100 | Per-IP rate limit. Increase if a single server sends high volume legitimately. |
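The buffer_usage guidance can be turned into a scripted check on the /health response body. The sketch below parses the JSON with awk to stay dependency-free; it assumes the flat response shape shown in the Monitoring section, and jq would be the more robust choice if it is available. The function name is hypothetical.

```shell
# health_ok: reads a /health JSON body on stdin.
# Exit status 0 if db_ok is true and buffer_usage is at most 0.5.
health_ok() {
  awk '
    { body = body $0 }
    END {
      db_ok = (body ~ /"db_ok" *: *true/)
      usage = 1                              # treat a missing value as unhealthy
      if (match(body, /"buffer_usage" *: *[0-9.]+/)) {
        s = substr(body, RSTART, RLENGTH)
        sub(/.*: */, "", s)
        usage = s + 0
      }
      exit !(db_ok && usage <= 0.5)
    }'
}

# Example use with a live instance:
#   curl -sf https://errors.yourapp.com/health | health_ok || echo "bloop unhealthy"
```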

Monitoring

# Health check — use this for uptime monitoring

curl -sf https://errors.yourapp.com/health | jq .

# Expected response:

# { "status": "ok", "db_ok": true, "buffer_usage": 0.002 }

# Alert if buffer_usage > 0.5 or db_ok is false

Deploy to Railway

Railway is the fastest way to deploy Bloop. This guide walks through a complete production setup.

Step 1: Create the Project

- Go to railway.app/new and click Deploy from GitHub repo

- Select your Bloop fork (or use the template repo)

- Railway auto-detects the Dockerfile and begins building

Step 2: Add a Persistent Volume

This is critical — without a volume, your database is lost on every deploy.

- In your service settings, go to Volumes

- Click Add Volume

- Set the mount path to /data

- Railway provisions the volume automatically (default 10 GB, expandable)

Do this before the first deploy completes. If Bloop starts without a volume, it creates the database in the ephemeral container filesystem, and you'll lose it on the next deploy.

Step 3: Set Environment Variables

Go to Variables in your service and add each of these:

| Variable | Value | Notes |

|---|---|---|

| BLOOP__AUTH__HMAC_SECRET | A random 64+ char string | Generate with openssl rand -hex 32. This is your master signing key. |

| BLOOP__AUTH__RP_ID | errors.yourapp.com | Must match your custom domain exactly (no protocol, no port). |

| BLOOP__AUTH__RP_ORIGIN | https://errors.yourapp.com | Full URL with https://. Must match what the browser sees. |

| BLOOP__DATABASE__PATH | /data/bloop.db | Points to your persistent volume. |

| RUST_LOG | bloop=info | Use bloop=debug for troubleshooting. |

Optional variables:

| Variable | Purpose |

|---|---|

| BLOOP_SLACK_WEBHOOK_URL | Global Slack webhook for alerts (fallback for rules without channels) |

| BLOOP_WEBHOOK_URL | Global generic webhook URL |

| BLOOP__RATE_LIMIT__PER_SECOND | Ingestion rate limit per IP (default: 100) |

| BLOOP__RETENTION__RAW_EVENTS_DAYS | How long to keep raw events (default: 7) |

Step 4: Add a Custom Domain

- In Settings → Networking → Custom Domain, enter your domain (e.g., errors.yourapp.com)

- Railway provides the CNAME target; add it to your DNS

- Railway provisions a TLS certificate automatically

- Wait for DNS propagation (usually 1-5 minutes)

The domain must be configured before you register your first passkey. WebAuthn binds credentials to the origin. If you register on *.up.railway.app and later switch to a custom domain, those passkeys won't work on the new domain.
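Because passkeys are bound to the origin, it can be worth checking that the two WebAuthn variables agree before the first deploy. The sketch below is a hypothetical pre-flight check: it extracts the host from the origin and accepts it if it equals the RP ID or is a subdomain of it, which is the general WebAuthn relationship between the two. The guide above recommends an exact match, which also passes this check.

```shell
# origin_matches_rp_id <rp_id> <rp_origin>
# Exit status 0 if the origin's host equals the RP ID or is a subdomain of it.
origin_matches_rp_id() {
  _h=${2#*://}      # strip the scheme
  _h=${_h%%/*}      # strip any path
  _h=${_h%%:*}      # strip any port
  case "$_h" in
    "$1"|*."$1") return 0 ;;
    *) return 1 ;;
  esac
}

origin_matches_rp_id errors.yourapp.com https://errors.yourapp.com && echo "RP settings are consistent"
```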

Step 5: Configure Health Check

- In Settings → Deploy, set the health check path to /health

- Set timeout to 10 seconds

- Railway will wait for a 200 response before routing traffic to the new deployment

Step 6: First Login & Project Setup

- Visit https://errors.yourapp.com; you'll see the passkey registration screen

- Register your passkey (fingerprint, Face ID, or hardware key)

- The first user is automatically an admin

- Go to Settings → Projects — a default project is created automatically

- Create additional projects for each app/service

- Copy the API key and follow the SDK snippets shown below each project

Step 7: Verify Integration

Send a test error to confirm everything is working:

# Replace with your actual values

API_KEY="your-project-api-key"

ENDPOINT="https://errors.yourapp.com"

BODY='{"timestamp":'$(date +%s)',"source":"api","environment":"production","release":"1.0.0","error_type":"TestError","message":"Hello from Bloop!"}'

SIG=$(echo -n "$BODY" | openssl dgst -sha256 -hmac "$API_KEY" | awk '{print $2}')

curl -s -w "\nHTTP %{http_code}\n" \

-X POST "$ENDPOINT/v1/ingest" \

-H "Content-Type: application/json" \

-H "X-Signature: $SIG" \

-H "X-Project-Key: $API_KEY" \

-d "$BODY"

# Expected: HTTP 200

# Check the dashboard: your test error should appear within 2 seconds

Railway Resource Usage

Bloop is lightweight. Typical Railway resource usage:

| Metric | Idle | Moderate load (1k events/min) |

|---|---|---|

| Memory | ~20 MB | ~50 MB |

| CPU | < 1% | ~5% |

| Disk | ~15 MB (binary) | Depends on volume and retention |

Railway's Hobby plan ($5/month) is more than sufficient for most use cases.

Deploy to Dokploy

- Add application: In your Dokploy dashboard, create a new application from your Git repository.

- Build configuration: Select "Dockerfile" as the build method. Dokploy will use the Dockerfile in the repo root.

- Environment variables: Add the same variables listed in the Railway guide in the Environment tab.

- Volume mount: Add a persistent volume:

Host path: /opt/dokploy/volumes/bloop

Container path: /data

- Domain & SSL: Add your domain in the Domains tab. Enable "Generate SSL" for automatic Let's Encrypt certificates. Set the container port to 5332.

- Health check: Set the health check path to /health and port to 5332.

Then follow Steps 6 and 7 from the Railway guide for first login and verification.

Deploy to Docker / VPS

Run Bloop on any server with Docker installed.

Quick Start

docker run -d --name bloop \

--restart unless-stopped \

-p 5332:5332 \

-v bloop_data:/data \

-e BLOOP__AUTH__HMAC_SECRET=$(openssl rand -hex 32) \

-e BLOOP__AUTH__RP_ID=errors.yourapp.com \

-e BLOOP__AUTH__RP_ORIGIN=https://errors.yourapp.com \

-e BLOOP__DATABASE__PATH=/data/bloop.db \

-e RUST_LOG=bloop=info \

ghcr.io/jaikoo/bloop:latest

With Docker Compose

# docker-compose.yml

services:

bloop:

image: ghcr.io/jaikoo/bloop:latest

restart: unless-stopped

ports:

- "5332:5332"

volumes:

- bloop_data:/data

environment:

BLOOP__AUTH__HMAC_SECRET: "${BLOOP_SECRET}"

BLOOP__AUTH__RP_ID: errors.yourapp.com

BLOOP__AUTH__RP_ORIGIN: https://errors.yourapp.com

BLOOP__DATABASE__PATH: /data/bloop.db

RUST_LOG: bloop=info

healthcheck:

test: ["CMD", "curl", "-sf", "http://localhost:5332/health"]

interval: 30s

timeout: 5s

retries: 3

volumes:

bloop_data:

Reverse Proxy (Nginx)

Place Bloop behind a reverse proxy for TLS termination:

server {

listen 443 ssl http2;

server_name errors.yourapp.com;

ssl_certificate /etc/letsencrypt/live/errors.yourapp.com/fullchain.pem;

ssl_certificate_key /etc/letsencrypt/live/errors.yourapp.com/privkey.pem;

location / {

proxy_pass http://127.0.0.1:5332;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

# Important for large source map uploads

client_max_body_size 50m;

}

}

If you use Caddy instead, it handles TLS automatically:

errors.yourapp.com { reverse_proxy localhost:5332 }

Backups

Bloop stores all data in a single SQLite file. Back it up with the SQLite .backup command while the server is running, or with a plain file copy while it is stopped.

Safe Hot Backup

SQLite's .backup command creates a consistent snapshot without stopping the server:

# From the host (Docker)

docker exec bloop sqlite3 /data/bloop.db ".backup /data/backup.db"

docker cp bloop:/data/backup.db ./bloop-backup-$(date +%Y%m%d).db

# Or directly on the volume

sqlite3 /var/lib/docker/volumes/bloop_data/_data/bloop.db \

".backup /tmp/bloop-backup.db"

Automated Daily Backups

#!/bin/bash

# /opt/bloop/backup.sh — run via cron

BACKUP_DIR="/opt/bloop/backups"

KEEP_DAYS=14

mkdir -p "$BACKUP_DIR"

# Create backup

docker exec bloop sqlite3 /data/bloop.db \

".backup /data/backup-tmp.db"

docker cp bloop:/data/backup-tmp.db \

"$BACKUP_DIR/bloop-$(date +%Y%m%d-%H%M).db"

docker exec bloop rm /data/backup-tmp.db

# Prune old backups

find "$BACKUP_DIR" -name "bloop-*.db" -mtime +$KEEP_DAYS -delete

echo "Backup complete: $(ls -lh "$BACKUP_DIR"/bloop-*.db | tail -1)"

# Add to crontab: daily at 3 AM (appends to the existing crontab instead of replacing it)

(crontab -l 2>/dev/null; echo "0 3 * * * /opt/bloop/backup.sh >> /var/log/bloop-backup.log 2>&1") | crontab -

Railway Backups

Railway volumes support snapshots. You can also back up by running a one-off command:

# Connect to Railway service shell

railway shell

# Inside the container

sqlite3 /data/bloop.db ".backup /data/bloop-backup.db"

# Download via Railway CLI

railway volume download /data/bloop-backup.db

Restoring from Backup

# Stop the server first

docker stop bloop

# Replace the database

docker cp ./bloop-backup-20240115.db bloop:/data/bloop.db

# Restart

docker start bloop

Always stop the server before restoring. The database can be corrupted if you overwrite the file while it's in use. The WAL journal (bloop.db-wal) must also be consistent with the main file; using .backup ensures this.

Dashboard Guide

The Bloop dashboard is a single-page application served at the root URL of your Bloop instance.

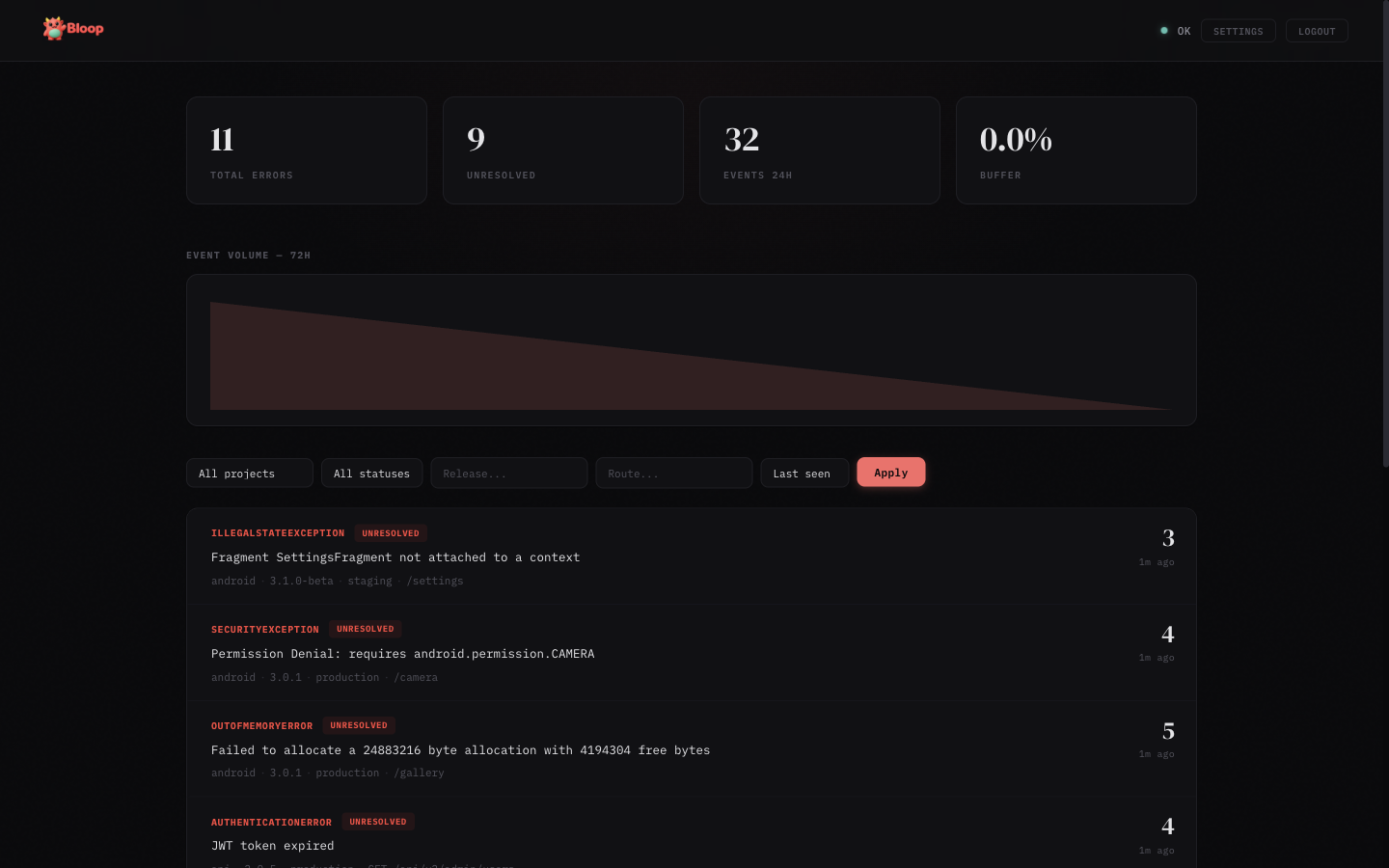

Main View

- Stats bar — Shows total errors, unresolved count, events in the last 24 hours, and buffer usage

- Trend chart — SVG area chart showing hourly event volume over the last 72 hours

- Error list — Aggregated errors with sparklines, sorted by last seen. Each row shows error type, message, count, source badge, and a 24-hour trend sparkline

- Filters — Filter by project, status (unresolved/resolved/ignored/muted), release, route, and sort order

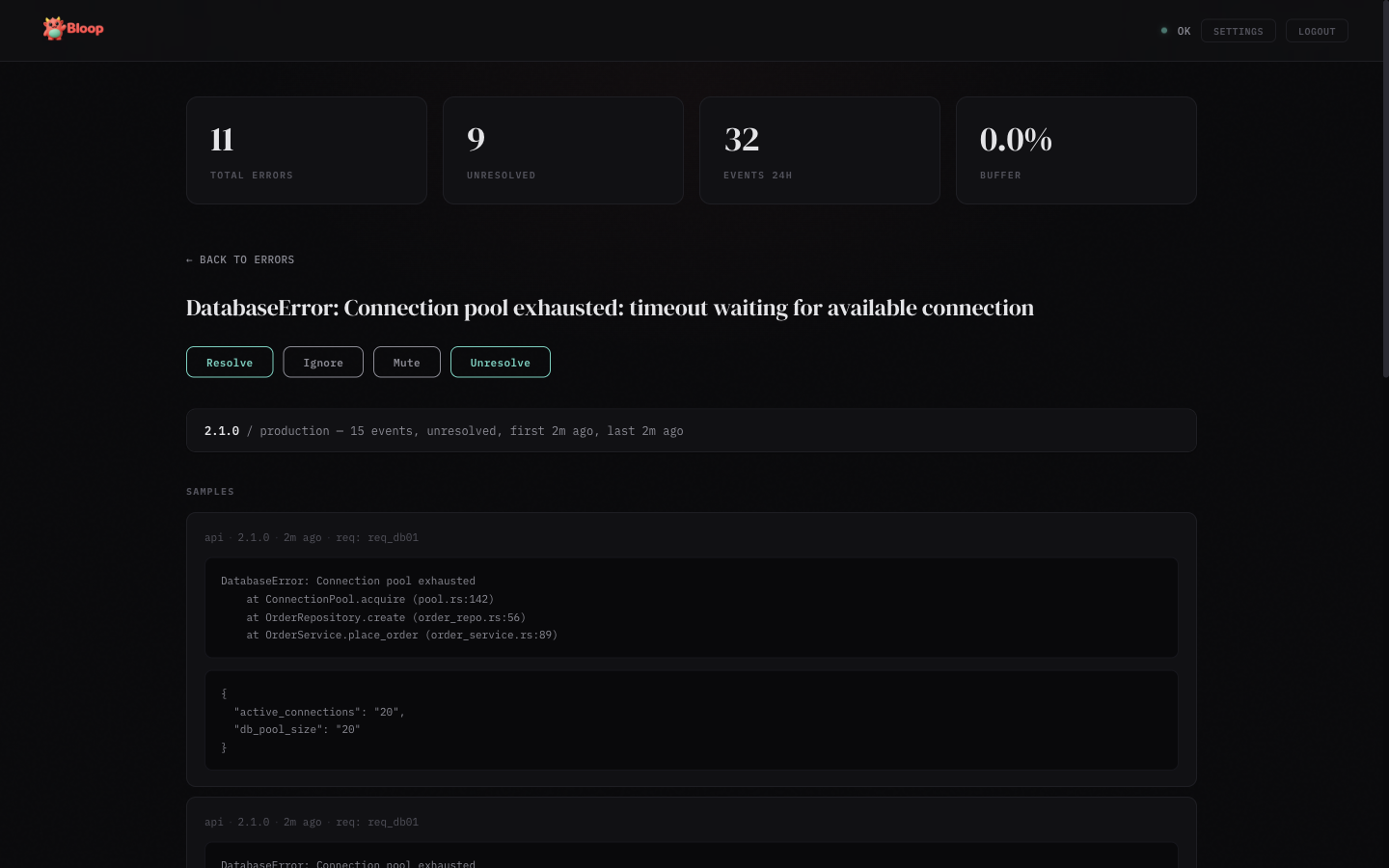

Error Detail

Click any error row to see the detail view:

- Aggregates — Breakdown by release and environment with event counts and timestamps

- Samples — Up to 5 sample occurrences with stack traces, metadata, and source info

- Deobfuscated stacks — When source maps are uploaded, a toggle appears to switch between Original and Raw stack views

- Actions — Resolve, Ignore, Mute, or Unresolve the error

- Status timeline — Audit trail of all status changes with timestamps and who made them

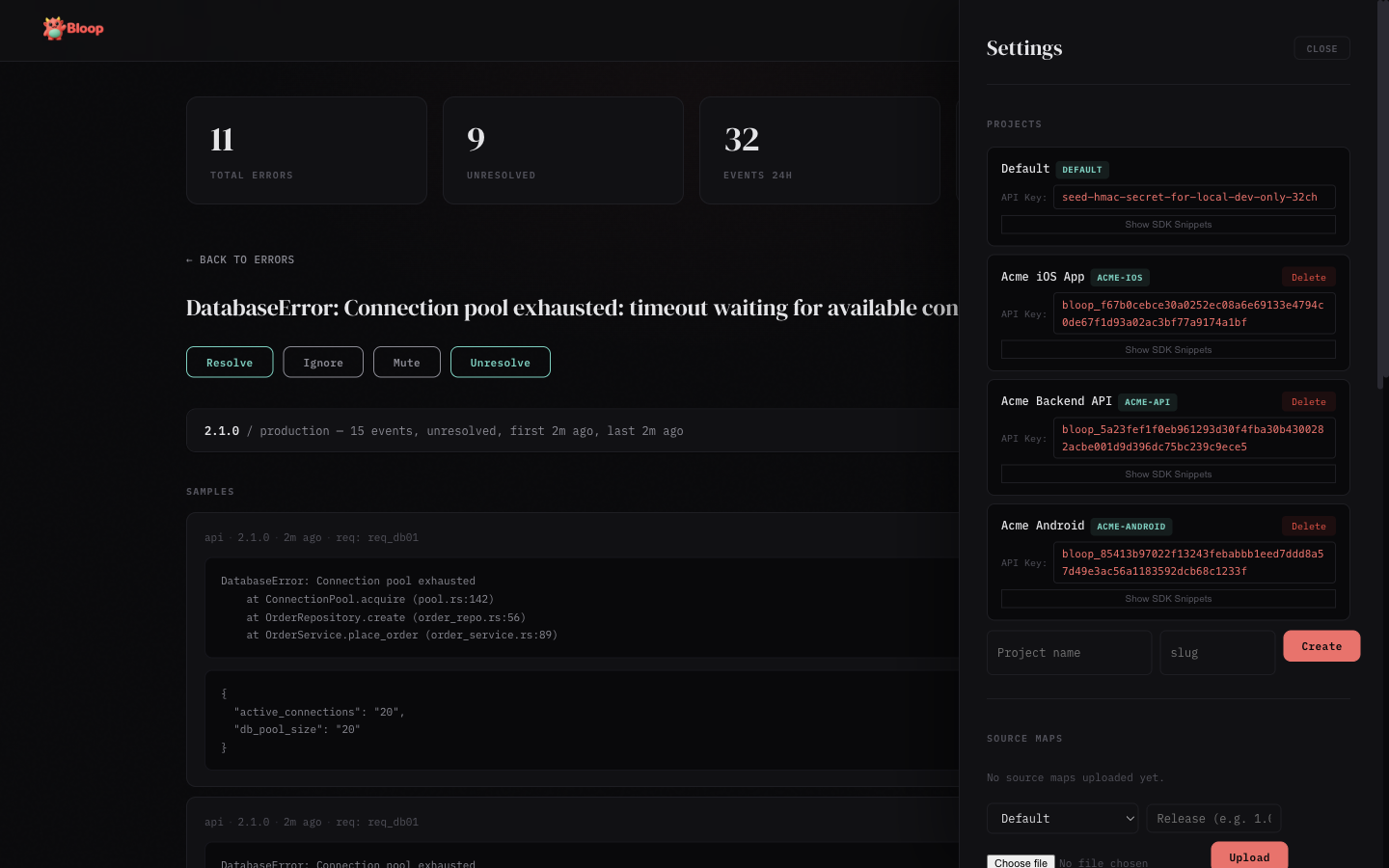

Settings

Click the gear icon in the header to open the settings panel (admin only):

- Projects — Create, view, and delete projects. Each project shows its full API key with click-to-copy and expandable SDK snippets

- API Tokens — Create, list, and revoke scoped bearer tokens for programmatic access. Tokens are project-scoped with granular permissions (errors, sourcemaps, alerts)

- Source Maps — Upload .map files per project and release, view and delete uploaded maps

- Alerts — Create and manage alert rules, add notification channels (Slack, webhook, email), enable/disable and test rules

- Users — View registered users, promote/demote admin roles, and remove accounts

- Invites — Generate invite links for new users

- Data Retention — Configure global and per-project retention periods, view storage stats, and trigger manual purges

Insights Panel

When the analytics feature is enabled (--features analytics), an Insights button appears in the header. The button is hidden when analytics is not compiled in — the dashboard probes /v1/analytics/spikes on load and only shows the button if it gets a 200 response.

The Insights panel has five sub-tabs:

- Spikes — Anomalous errors detected via z-score analysis. Each row shows a horizontal bar (width = z-score severity), the error message, event count, and z-score value. Color-coded: dim for z≥2.5, coral for z≥3, red for z≥4

- Movers — Errors with the largest absolute change between the current and previous time window. Shows delta arrows, count comparison, and percentage badges

- Correlations — Pairs of errors that tend to spike together (Pearson correlation ≥0.7, requiring ≥6 data points). Useful for root cause discovery

- Releases — Impact score per release combining new fingerprints and error count deltas. Cards are color-coded: red = high impact (score >50), green = improvement (score <0), neutral otherwise

- Environments — Table showing each environment's total count, percentage bar, unique error count, and P50/P90/P99 hourly rate percentiles
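To make the Spikes thresholds concrete, here is a minimal sketch of a z-score check with the color buckets listed above. It assumes the baseline is a list of earlier hourly counts and uses the population standard deviation; Bloop's actual window and estimator are not documented here, so both are assumptions, and the function names are hypothetical.

```python
from statistics import mean, pstdev
from typing import Optional, Sequence


def z_score(history: Sequence[float], current: float) -> Optional[float]:
    """Z-score of the current count against earlier counts (assumed baseline)."""
    if len(history) < 2:
        return None  # not enough data for a meaningful baseline
    sigma = pstdev(history)
    if sigma == 0:
        return None  # flat history: the z-score is undefined
    return (current - mean(history)) / sigma


def severity_color(z: float) -> Optional[str]:
    """Map a z-score to the color buckets described for the Spikes tab."""
    if z >= 4:
        return "red"
    if z >= 3:
        return "coral"
    if z >= 2.5:
        return "dim"
    return None  # below 2.5 the error is not shown as a spike


# Hypothetical hourly counts for one error, followed by a sudden jump.
baseline = [8, 12, 8, 12]  # mean 10, population standard deviation 2
print(severity_color(z_score(baseline, 18)))  # z = 4.0
```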

Results are cached for 60 seconds (configurable via cache_ttl_secs). All queries respect the active project filter.
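The Correlations criteria (Pearson correlation ≥0.7 over at least 6 data points) can be illustrated with a short sketch. It assumes each error is represented by a series of per-interval event counts aligned on the same time buckets; that representation and the function names are assumptions, not Bloop's implementation.

```python
from math import sqrt
from typing import Optional, Sequence

MIN_POINTS = 6         # minimum number of data points, per the Correlations tab
MIN_CORRELATION = 0.7  # minimum Pearson coefficient, per the Correlations tab


def pearson(xs: Sequence[float], ys: Sequence[float]) -> Optional[float]:
    """Pearson correlation of two aligned series, or None if it is undefined."""
    if len(xs) != len(ys) or len(xs) < MIN_POINTS:
        return None
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return None  # a constant series has no defined correlation
    return cov / sqrt(var_x * var_y)


def spike_together(xs: Sequence[float], ys: Sequence[float]) -> bool:
    """True when two errors would qualify as a correlated pair."""
    r = pearson(xs, ys)
    return r is not None and r >= MIN_CORRELATION
```

Two series that rise and fall in lockstep, such as [1, 2, 3, 4, 5, 6] and [2, 4, 6, 8, 10, 12], qualify; the same pair truncated to five points does not, because it falls under the minimum sample size.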

Error Lifecycle

Every error in Bloop has a status that tracks its lifecycle:

| Status | Meaning | Alerts fire? |
|---|---|---|
| unresolved | Active issue that needs attention | Yes |
| resolved | Fixed and deployed | Yes (regression) |
| ignored | Known issue, not worth fixing | No |
| muted | Temporarily silenced | No |

Status Transitions

All status changes are recorded in an audit trail visible in the error detail view.

- New error → unresolved (automatic)

- Resolve → resolved — marks the error as fixed

- Ignore → ignored — permanently suppresses alerts for this error

- Mute → muted — temporarily suppresses alerts

- Unresolve → unresolved — re-opens the error for attention

Regression Detection

If a resolved error receives a new occurrence, Bloop treats it as a regression: the error returns to the unresolved list with its full history preserved.
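The lifecycle rules in this section can be condensed into a small model. This is a sketch for reasoning about the statuses, not Bloop's code: the names are hypothetical, and the behavior of ignored and muted errors on new occurrences (left unchanged here) is an assumption.

```python
from enum import Enum


class Status(str, Enum):
    UNRESOLVED = "unresolved"
    RESOLVED = "resolved"
    IGNORED = "ignored"
    MUTED = "muted"


# Action -> resulting status, as listed under Status Transitions.
ACTION_TARGET = {
    "resolve": Status.RESOLVED,
    "ignore": Status.IGNORED,
    "mute": Status.MUTED,
    "unresolve": Status.UNRESOLVED,
}

# The "Alerts fire?" column of the status table.
ALERTS_FIRE = {
    Status.UNRESOLVED: True,
    Status.RESOLVED: True,  # only as a regression alert
    Status.IGNORED: False,
    Status.MUTED: False,
}


def apply_action(action: str) -> Status:
    """Status an error ends up in after a dashboard or API action."""
    return ACTION_TARGET[action]


def on_new_occurrence(current: Status) -> Status:
    """Status after a new event arrives for an already known error."""
    # Regression: a resolved error that recurs goes back to unresolved.
    if current is Status.RESOLVED:
        return Status.UNRESOLVED
    # Assumption: every other status is left unchanged by new events.
    return current
```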

API

# Resolve

curl -X POST http://localhost:5332/v1/errors/FINGERPRINT/resolve \

-H "Cookie: session=YOUR_SESSION_TOKEN"

# Mute

curl -X POST http://localhost:5332/v1/errors/FINGERPRINT/mute \

-H "Cookie: session=YOUR_SESSION_TOKEN"

# Unresolve

curl -X POST http://localhost:5332/v1/errors/FINGERPRINT/unresolve \

-H "Cookie: session=YOUR_SESSION_TOKEN"

# View status history

curl http://localhost:5332/v1/errors/FINGERPRINT/history \

-H "Cookie: session=YOUR_SESSION_TOKEN"

Troubleshooting

Common Issues

| Problem | Cause | Solution |
|---|---|---|
| 401 Unauthorized on ingest | HMAC signature doesn't match | Ensure you're signing the exact request body with the correct API key. The signature must be a hex-encoded HMAC-SHA256 of the raw JSON body. |
| 401 invalid project key | API key not recognized | Check the X-Project-Key header matches a project's API key in Settings → Projects. Keys are case-sensitive. |
| Events accepted but not appearing | Buffer full or processing delay | Check the /health endpoint: if buffer_usage is near 1.0, the pipeline is backed up. Events are batched every 2 seconds. |
| Passkey registration fails | rp_id / rp_origin mismatch | The rp_id must match your domain (e.g., errors.myapp.com) and rp_origin must match the full URL including protocol. |
| Source maps not deobfuscating | Release version mismatch | The release in the source map upload must exactly match the release field sent with the error event. |
| Slack notifications not arriving | Webhook URL expired or channel archived | Test the alert via Settings → Alerts → Test. Check that the Slack app is still installed and the channel exists. |
| Dashboard shows stale data | Browser caching | Hard refresh (Cmd+Shift+R / Ctrl+Shift+R). Stats auto-refresh every 30 seconds. |
| High memory usage | Large moka cache | Source maps and aggregates are cached in memory. Restart the server to clear caches, or reduce the number of uploaded source maps. |
| 500 Unable To Extract Key! | Rate limiter can't determine client IP | This happens when running behind a reverse proxy (Traefik, Nginx) that doesn't forward client IP headers. Upgrade to the latest Bloop release, which uses SmartIpKeyExtractor to read X-Forwarded-For and X-Real-IP headers automatically. |
| 403 Forbidden with bearer token | Token lacks required scope | Check the token's scopes. Read operations require *:read scopes, write operations require *:write scopes. Create a new token with the needed scopes. |
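A frequent cause of the 401 signature mismatch is signing a re-serialized copy of the JSON instead of the bytes that are actually sent. The sketch below uses Python's standard hmac module to compute the hex-encoded HMAC-SHA256 described in the table; the helper names are hypothetical and are intended for local debugging, not as Bloop's server-side check.

```python
import hashlib
import hmac
import json


def sign_body(api_key: str, raw_body: bytes) -> str:
    """Hex-encoded HMAC-SHA256 of the raw request body, keyed by the API key."""
    return hmac.new(api_key.encode("utf-8"), raw_body, hashlib.sha256).hexdigest()


def signature_matches(api_key: str, raw_body: bytes, signature_hex: str) -> bool:
    """Compare a candidate signature against the expected one in constant time."""
    expected = sign_body(api_key, raw_body).encode("ascii")
    candidate = signature_hex.strip().lower().encode("ascii", "replace")
    return hmac.compare_digest(expected, candidate)


# The bytes that go on the wire are the bytes that must be signed.
raw = b'{"message":"Something went wrong","source":"api"}'
# json.dumps inserts spaces after ':' and ',', so the bytes (and signature) change.
reserialized = json.dumps(json.loads(raw)).encode("utf-8")

key = "bloop_example_key"  # placeholder, not a real project key
print(sign_body(key, raw) == sign_body(key, reserialized))  # False
```

Sending the same byte string that was signed, rather than letting an HTTP library serialize the payload a second time, avoids this class of mismatch.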

Checking Server Health

curl http://localhost:5332/health | jq .

# Response:

# {

# "status": "ok",

# "db_ok": true,

# "buffer_usage": 0.002

# }

buffer_usage shows the MPSC channel fill ratio (0.0 = empty, 1.0 = full). If it is consistently above 0.5, consider increasing ingest.channel_capacity or pipeline.flush_batch_size.
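Because the guidance above depends on buffer_usage staying high over time rather than on a single reading, a monitoring script would typically look at several samples. The following sketch assumes the /health response has the three fields shown above; the function names, the returned labels, and the sampling approach are hypothetical.

```python
import json
from typing import Sequence
from urllib.request import urlopen


def fetch_health(base_url: str = "http://localhost:5332", timeout: float = 5.0) -> dict:
    """Fetch one /health sample from a running Bloop instance."""
    with urlopen(f"{base_url}/health", timeout=timeout) as resp:
        return json.load(resp)


def assess_health(samples: Sequence[dict], warn_at: float = 0.5) -> str:
    """Classify a series of /health samples taken some seconds apart."""
    if not samples:
        return "unknown"
    # Any failed status or database check counts as unhealthy.
    if any(s.get("status") != "ok" or not s.get("db_ok") for s in samples):
        return "unhealthy"
    # "Consistently above 0.5": every sample exceeds the threshold.
    if all(s.get("buffer_usage", 0.0) > warn_at for s in samples):
        return "backlogged"
    return "ok"
```

A caller might collect a handful of fetch_health() results a few seconds apart and pass them to assess_health(); a "backlogged" result corresponds to the case where raising ingest.channel_capacity or pipeline.flush_batch_size is worth considering.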

Debug Logging

# Enable detailed logging

RUST_LOG=bloop=debug cargo run

# Or in Docker

docker run -e RUST_LOG=bloop=debug ...

Debug logging shows every ingested event, fingerprint computation, batch write, and alert evaluation.